Note: The following is an account of an incident that occurred during my time as an SRE at a previous company. I do not have access to the original outage report, so I am sharing this story from memory. While I may not remember every detail accurately, I have described the general sequence of events to the best of my ability. Any errors or omissions are entirely my own. To maintain confidentiality, the names of the company, product, and tools, along with any other identifying information, have been omitted.

In one of my previous roles at a leading cloud-based company, I encountered a fascinating challenge that underscored the importance of resource management in Kubernetes. While I can’t reveal specific company details, I’ll share a fictionalized account of an incident that carries valuable lessons for anyone navigating the complexities of scaling in a Kubernetes environment.

The Scenario

Picture this: a team is conducting a rigorous performance test, rapidly deploying numerous pods. Suddenly, an alert flares up, signaling trouble. The Open Policy Agent (OPA) service, responsible for enforcing policies across the environment, is struggling to keep up with the scale-up activity.

The Dilemma

Upon investigation, it becomes apparent that the existing OPA replicas are insufficient for handling the entire cluster, and each replica is running with a very low memory limit. Two options emerge: increase the number of OPA pods, or raise the resource limits of the existing ones. To avoid more complex configuration changes, the team decides to raise the memory limits.

The Solution

As the revised configuration rolls out, the issue is resolved. However, the incident exposes a crucial gap: the absence of tools to monitor resource utilization and identify which OPA policies consume the most resources.

Understanding Kubernetes Resource Management

Let’s delve into the general principles of how Kubernetes manages resources for pods and containers.


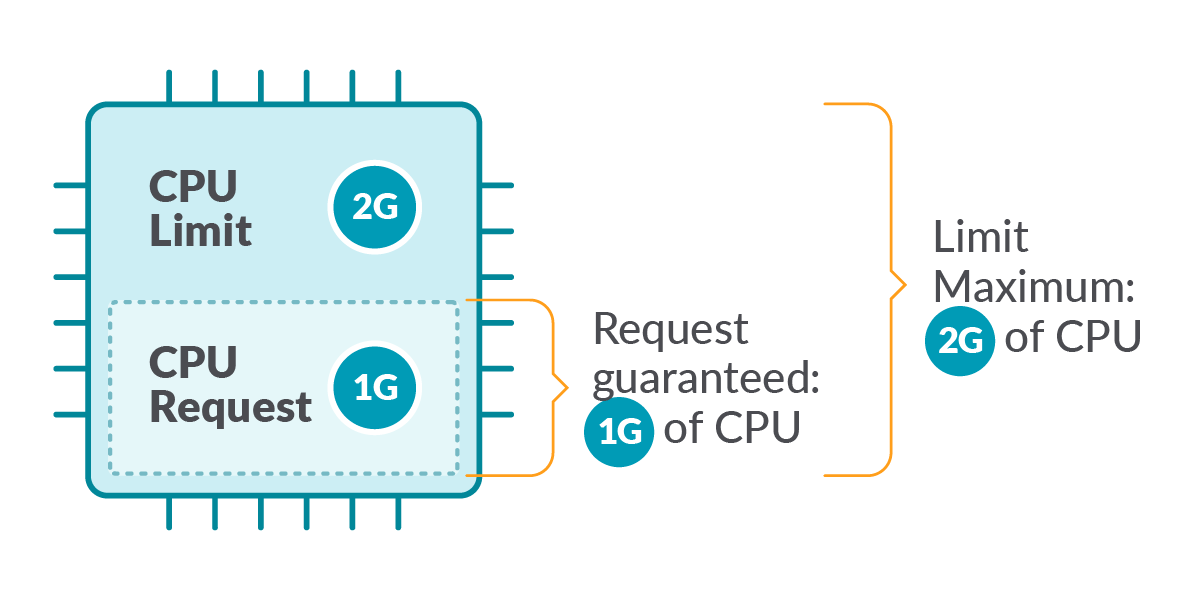

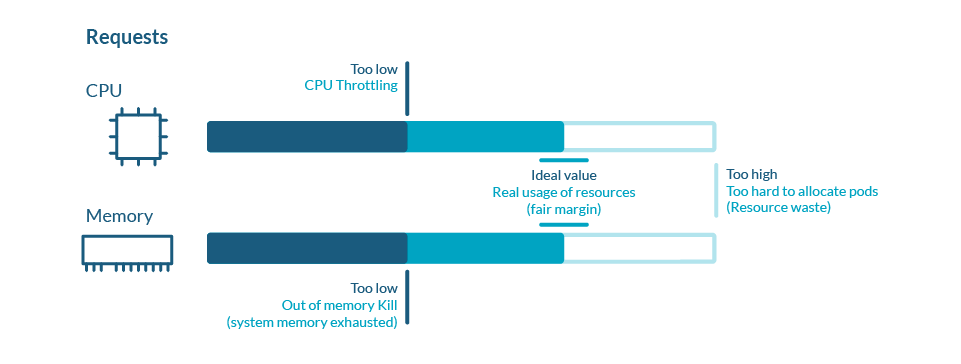

Resource Requests and Limits:

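- The scheduler uses resource requests to choose a node for each pod: it places a pod only on a node whose unreserved capacity can accommodate the pod's requests.

- Resource limits cap how much of a resource a container may use; they are enforced on the node by the kubelet and the container runtime, ultimately through kernel cgroups.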
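To make the distinction concrete, here is a minimal sketch that builds a Pod manifest as a Python dict mirroring the Kubernetes Pod schema, with both `requests` and `limits` set on one container, and prints it as JSON (which `kubectl apply -f` also accepts). The pod name, container name, image, and resource values are all hypothetical and chosen only for illustration.

```python
import json


def container_with_resources(name: str, image: str,
                             cpu_request: str, mem_request: str,
                             cpu_limit: str, mem_limit: str) -> dict:
    """Build a container spec fragment with resource requests and limits.

    Requests are what the scheduler reserves on a node; limits are the
    ceiling enforced on the node while the container runs.
    """
    return {
        "name": name,
        "image": image,
        "resources": {
            "requests": {"cpu": cpu_request, "memory": mem_request},
            "limits": {"cpu": cpu_limit, "memory": mem_limit},
        },
    }


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "opa-example"},  # hypothetical pod name
    "spec": {
        "containers": [
            container_with_resources(
                name="opa",  # hypothetical container name
                image="example.registry/opa:placeholder",  # hypothetical image
                cpu_request="250m", mem_request="128Mi",
                cpu_limit="500m", mem_limit="256Mi",
            )
        ]
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```

In the incident above, "raising the memory limits" amounts to changing the `limits.memory` value in a spec like this one (in practice, in the Deployment's pod template rather than a bare Pod).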


CPU and Memory Resource Types:

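- CPU represents compute processing and is specified in Kubernetes CPU units. Fractional amounts are allowed, such as 0.5, which can also be written as 500m (500 millicores).

- Memory is specified in bytes, either as a plain integer or with a decimal suffix (k, M, G, T, P, E) or a power-of-two suffix (Ki, Mi, Gi, Ti, Pi, Ei).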
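As an illustration of how these units translate into numbers, here is a small sketch of a quantity parser. It is a simplified assumption of mine, not the real Kubernetes `resource.Quantity` parser: it handles only the common suffixes listed above, plain numbers, and the `m` suffix for CPU.

```python
from decimal import Decimal

# Decimal (SI) and power-of-two suffixes used in Kubernetes quantities.
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}


def parse_memory(q: str) -> int:
    """Convert a memory quantity such as '128Mi' or '1G' to bytes."""
    # Check two-letter binary suffixes first so 'Mi' is not mistaken for 'M'.
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return int(Decimal(q[:-2]) * factor)
    for suffix, factor in _DECIMAL.items():
        if q.endswith(suffix):
            return int(Decimal(q[:-1]) * factor)
    return int(Decimal(q))  # no suffix: plain bytes


def parse_cpu(q: str) -> Decimal:
    """Convert a CPU quantity such as '500m' or '1.5' to CPU units (cores)."""
    if q.endswith("m"):
        return Decimal(q[:-1]) / 1000
    return Decimal(q)


if __name__ == "__main__":
    print(parse_memory("128M"), parse_memory("128Mi"))
    print(parse_cpu("500m"), parse_cpu("1.5"))
```

Using `Decimal` avoids binary floating-point rounding when converting values such as `0.1` CPU.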


Resource Requests and Limits in Pods:

- For each container, specify resource limits and requests for CPU and memory.

- Pod resource request/limit is the sum of individual container requests/limits for each resource type.
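The summing rule can be sketched as follows. This is a simplified model under my own assumptions: it only looks at regular containers (init containers and pod overhead are accounted for differently and are ignored here), and its memory parser only understands Ki/Mi/Gi suffixes and plain bytes. The container names and values are hypothetical.

```python
from decimal import Decimal

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def _cpu(q: str) -> Decimal:
    """CPU quantity ('250m' or '0.25') -> cores."""
    return Decimal(q[:-1]) / 1000 if q.endswith("m") else Decimal(q)


def _mem(q: str) -> int:
    """Memory quantity with a Ki/Mi/Gi suffix or plain bytes -> bytes (simplified)."""
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return int(Decimal(q[:-2]) * factor)
    return int(Decimal(q))


def pod_totals(containers: list[dict], kind: str) -> dict:
    """Sum 'requests' or 'limits' across a pod's regular containers."""
    total_cpu, total_mem = Decimal(0), 0
    for c in containers:
        res = c.get("resources", {}).get(kind, {})
        if "cpu" in res:
            total_cpu += _cpu(res["cpu"])
        if "memory" in res:
            total_mem += _mem(res["memory"])
    return {"cpu": total_cpu, "memory_bytes": total_mem}


# Hypothetical two-container pod: an application plus a sidecar.
containers = [
    {"name": "app", "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}}},
    {"name": "sidecar", "resources": {"requests": {"cpu": "100m", "memory": "64Mi"}}},
]

if __name__ == "__main__":
    print(pod_totals(containers, "requests"))
```

One caveat the simple sum hides: if any container omits a limit for a resource, the pod as a whole is not bounded for that resource, even though this function would still report a finite total.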


CPU and Memory Resource Units:

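- CPU units are absolute: 1 CPU unit is one physical core or one virtual core, and 500m represents roughly the same amount of computing power on a single-core node as on a 48-core node.

- Memory limits and requests are measured in bytes, so the suffix matters: 128M is 128,000,000 bytes, while 128Mi is 134,217,728 bytes.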
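Because CPU units are absolute, the same request reserves a very different share of a small node than of a large one. The sketch below shows that arithmetic; as a simplifying assumption it divides by the node's total core count, whereas the scheduler actually compares requests against the node's allocatable CPU, which is somewhat lower once system reservations are subtracted.

```python
from fractions import Fraction


def millicores(q: str) -> int:
    """Parse a CPU quantity ('500m', '2', '0.5') into integer millicores."""
    if q.endswith("m"):
        return int(q[:-1])
    return int(Fraction(q) * 1000)


def share_of_node(cpu_request: str, node_cores: int) -> Fraction:
    """Fraction of a node's total CPU that a request reserves (ignores reservations)."""
    return Fraction(millicores(cpu_request), node_cores * 1000)


if __name__ == "__main__":
    for cores in (2, 48):
        frac = share_of_node("500m", cores)
        print(f"500m on a {cores}-core node: {float(frac):.2%} of capacity")
```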

Applying Resource Requests and Limits

- The kubelet passes container requests and limits to the container runtime.

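- A CPU limit defines a hard ceiling on how much CPU time the container may use in each scheduling period, while a CPU request acts as a relative weighting when containers compete for CPU.

- Memory requests are mainly used during scheduling, while a memory limit is enforced by the kernel: if the container tries to allocate beyond it, the kernel's out-of-memory (OOM) handling typically stops a process in the container.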
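To show what "hard ceiling" versus "weighting" means at the cgroup level, here is a sketch of the conversion as I understand the kubelet performs it on cgroup v1 nodes: a CPU request becomes `cpu.shares` (milliCPU × 1024 / 1000, minimum 2), and a CPU limit becomes `cpu.cfs_quota_us` over a default 100 ms `cpu.cfs_period_us` (milliCPU × period / 1000, minimum 1 ms). The function names are mine, and the constants reflect my understanding rather than a quote of kubelet source; on cgroup v2 nodes the values end up in `cpu.weight` and `cpu.max` through a different mapping.

```python
from typing import Optional

# Constants as I understand them for the kubelet on cgroup v1 (assumption).
SHARES_PER_CPU = 1024
MIN_SHARES = 2
MILLI_CPU_TO_CPU = 1000
DEFAULT_CFS_PERIOD_US = 100_000  # 100 ms scheduling period
MIN_QUOTA_PERIOD_US = 1_000      # 1 ms minimum quota


def milli_cpu_to_shares(milli_cpu: int) -> int:
    """CPU request -> cpu.shares: a relative weight under contention, not a cap."""
    if milli_cpu == 0:
        return MIN_SHARES
    shares = milli_cpu * SHARES_PER_CPU // MILLI_CPU_TO_CPU
    return max(shares, MIN_SHARES)


def milli_cpu_to_quota(milli_cpu: Optional[int],
                       period_us: int = DEFAULT_CFS_PERIOD_US) -> int:
    """CPU limit -> cpu.cfs_quota_us: CPU time allowed per period (a hard cap).

    None means "no CPU limit", which cgroup v1 expresses as a quota of -1.
    """
    if milli_cpu is None:
        return -1
    if milli_cpu <= 0:
        raise ValueError("a CPU limit must be positive; use None for no limit")
    quota = milli_cpu * period_us // MILLI_CPU_TO_CPU
    return max(quota, MIN_QUOTA_PERIOD_US)


if __name__ == "__main__":
    # A container with requests.cpu=500m and limits.cpu=500m.
    print("cpu.shares =", milli_cpu_to_shares(500))
    print("cpu.cfs_quota_us =", milli_cpu_to_quota(500))
```

The shares value only matters when containers compete for CPU, whereas the quota applies even on an otherwise idle node.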

Image: CPU Requests and Limits


Image: How CPU and Memory requests are handled


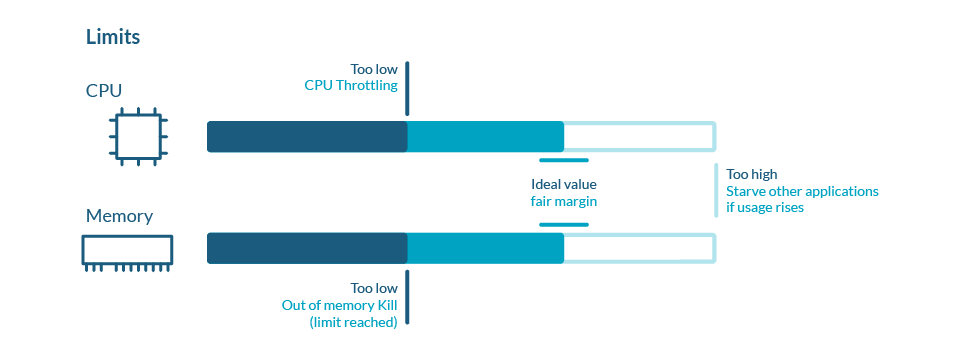

Exceeding Resource Limits


Memory Limits:

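- If a container tries to use more memory than its limit, the kernel's OOM killer typically terminates it, and the kubelet restarts it according to the pod's restartPolicy.

- The termination is recorded in the container's status (last state Terminated, reason OOMKilled), which kubectl describe pod displays.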
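Because the OOMKilled reason lives in the container status, it can be detected programmatically. Here is a minimal sketch that scans a Pod object, for example the output of `kubectl get pod <name> -o json`, and reports containers whose last termination reason was `OOMKilled`. The function name and the embedded sample pod are hypothetical, made up for illustration.

```python
import json


def oom_killed_containers(pod: dict) -> list[tuple[str, int]]:
    """Return (container name, restart count) for each container whose
    last termination reason was OOMKilled."""
    hits = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        terminated = status.get("lastState", {}).get("terminated") or {}
        if terminated.get("reason") == "OOMKilled":
            hits.append((status["name"], status.get("restartCount", 0)))
    return hits


# Hypothetical, trimmed-down Pod object for illustration only.
sample = json.loads("""
{
  "metadata": {"name": "opa-example"},
  "status": {
    "containerStatuses": [
      {"name": "opa", "restartCount": 3,
       "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}}},
      {"name": "sidecar", "restartCount": 0, "lastState": {}}
    ]
  }
}
""")

if __name__ == "__main__":
    print(oom_killed_containers(sample))
```

A climbing restart count alongside `OOMKilled` is a strong hint that the memory limit, rather than the application, is the first thing to revisit.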


CPU Limits:

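- Kubernetes does not terminate containers for exceeding their CPU limits. Instead, the kernel throttles them: once a container has used up its CPU quota for the current scheduling period, it must wait for the next period.

- A container may use more CPU than its request when the node has spare capacity, but never more than its limit.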
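Throttling shows up as added latency rather than as errors. The sketch below estimates the wall-clock time of a CPU-bound task under a CFS quota, using simplifying assumptions of my own: the task is single-threaded, starts at a period boundary, never blocks on anything except the quota, and the period is the default 100 ms.

```python
import math


def wall_time_ms(cpu_work_ms: float, limit_cores: float,
                 period_ms: float = 100.0) -> float:
    """Estimate wall-clock time for a single-threaded task needing
    cpu_work_ms of CPU under a quota of limit_cores * period_ms per period."""
    if cpu_work_ms <= 0:
        return 0.0
    quota_ms = limit_cores * period_ms
    if quota_ms >= period_ms:
        # One thread can use at most one full period of CPU time per period,
        # so a limit of 1 CPU or more never throttles it.
        return cpu_work_ms
    # Every period except the last uses its full quota, then waits out the rest.
    full_periods = math.ceil(cpu_work_ms / quota_ms) - 1
    remainder = cpu_work_ms - full_periods * quota_ms
    return full_periods * period_ms + remainder


if __name__ == "__main__":
    # 200 ms of CPU work under a 500m limit takes 350 ms of wall-clock time.
    print(wall_time_ms(200, 0.5))
```

Multi-threaded containers can exhaust the quota faster than this model suggests, because several threads draw on the same per-period budget.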

Image: How CPU and Memory limits are handled


Monitoring Resource Usage

- The kubelet reports resource usage as part of pod status.

- Monitoring tools, or the Metrics API (typically served by metrics-server and used by kubectl top), can retrieve pod resource usage for analysis.
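The tooling gap from the incident could be narrowed with something as small as the following sketch, which ranks containers by memory usage relative to their limits. It assumes you have fetched a `PodMetricsList` from the Metrics API (for example with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/<namespace>/pods`) and built a mapping of container limits from the pod specs. The `limits` mapping format, the function names, and the sample values are hypothetical; the memory parser is simplified to Ki/Mi/Gi suffixes and plain bytes.

```python
import json
from decimal import Decimal

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def mem_bytes(q: str) -> int:
    """Parse a memory quantity with a Ki/Mi/Gi suffix or plain bytes (simplified)."""
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return int(Decimal(q[:-2]) * factor)
    return int(Decimal(q))


def memory_pressure(pod_metrics: dict,
                    limits: dict[str, str]) -> list[tuple[str, str, float]]:
    """Return (pod, container, usage/limit), highest ratio first.

    limits: hypothetical mapping "pod/container" -> memory limit string.
    Containers without an entry in `limits` are skipped.
    """
    rows = []
    for item in pod_metrics.get("items", []):
        pod = item["metadata"]["name"]
        for c in item.get("containers", []):
            key = f"{pod}/{c['name']}"
            if key in limits:
                ratio = mem_bytes(c["usage"]["memory"]) / mem_bytes(limits[key])
                rows.append((pod, c["name"], ratio))
    return sorted(rows, key=lambda r: r[2], reverse=True)


# Hypothetical sample shaped like a Metrics API PodMetricsList.
sample = json.loads("""
{"kind": "PodMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [
   {"metadata": {"name": "opa-0"},
    "containers": [{"name": "opa", "usage": {"cpu": "120m", "memory": "230Mi"}}]},
   {"metadata": {"name": "opa-1"},
    "containers": [{"name": "opa", "usage": {"cpu": "80m", "memory": "100Mi"}}]}
 ]}
""")
limits = {"opa-0/opa": "256Mi", "opa-1/opa": "256Mi"}

if __name__ == "__main__":
    for pod, container, ratio in memory_pressure(sample, limits):
        print(f"{pod}/{container}: {ratio:.0%} of memory limit")
```

This only shows usage per container; attributing usage to individual OPA policies, as the incident called for, would need metrics exposed by OPA itself.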

Conclusion

This fictionalized incident highlights the critical role of resource management in Kubernetes. Understanding and monitoring resource utilization is essential for keeping a Kubernetes environment resilient and efficient. As you navigate the intricacies of scaling, keep these general principles in mind to keep your clusters running smoothly.

Acknowledgement

The images in this post have been sourced from a Sysdig blog post.